Let's make Cloud #55: Serverless OpenTelemetry at scale, Optimizing costs with GitHub Actions and AWS Fargate Spot, AI-powered video summarizer

Hello CloudMakers!

Today we shall see:

Serverless OpenTelemetry at scale

Optimizing costs with GitHub Actions and AWS Fargate Spot

AI-powered video summarizer

Enjoy!

Serverless OpenTelemetry at scale: the PostNL context

This article explains how PostNL tackles the challenge of tracking and understanding the massive flow of data and events in its logistics processes. The company uses a self-service serverless message broker that lets engineers set up event integrations with little effort.

I recommend reading the second part of the series as well. It compares two telemetry instrumentation approaches: automatic instrumentation is quick and easy but may miss specific details, while manual instrumentation lets you capture the business information that really matters. PostNL chose manual instrumentation to better manage its event flows, and the article explains the reasoning behind this choice in detail, complete with code examples.
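To make the idea of manually instrumenting event flows a little more concrete, here is a minimal, stdlib-only sketch (not PostNL's code) of its core mechanism: each event carries a W3C Trace Context `traceparent` value (the propagation format OpenTelemetry uses by default) next to business attributes that automatic instrumentation would not know about. The envelope layout, the function names `publish`/`consume` and the attribute names are hypothetical; in a real system the OpenTelemetry SDK's propagators and span API would do this work.

```python
import json
import re
import secrets
from dataclasses import dataclass
from typing import Optional, Tuple

# W3C Trace Context "traceparent" value, version 00 only:
# 00-<32 hex trace-id>-<16 hex parent-id>-<2 hex trace-flags>
TRACEPARENT_RE = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


@dataclass
class SpanContext:
    trace_id: str  # 32 lowercase hex characters, shared by every hop
    span_id: str   # 16 lowercase hex characters, unique per hop
    sampled: bool = True

    def to_traceparent(self) -> str:
        flags = "01" if self.sampled else "00"
        return f"00-{self.trace_id}-{self.span_id}-{flags}"


def parse_traceparent(header: str) -> Optional[SpanContext]:
    """Return the context carried by a traceparent string, or None if invalid."""
    match = TRACEPARENT_RE.fullmatch(header)
    if match is None:
        return None
    trace_id, span_id, flags = match.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero IDs are invalid per the spec
    return SpanContext(trace_id, span_id, sampled=bool(int(flags, 16) & 0x01))


def publish(payload: dict, business_attrs: dict,
            parent: Optional[SpanContext] = None) -> str:
    """Wrap a payload in a hypothetical event envelope that carries trace context.

    With a parent, the new hop keeps the parent's trace ID and gets a fresh
    span ID; without one, a new trace is started.
    """
    if parent is None:
        ctx = SpanContext(secrets.token_hex(16), secrets.token_hex(8))
    else:
        ctx = SpanContext(parent.trace_id, secrets.token_hex(8), parent.sampled)
    envelope = {
        "traceparent": ctx.to_traceparent(),
        "attributes": business_attrs,  # business data, e.g. a parcel ID
        "payload": payload,
    }
    return json.dumps(envelope)


def consume(raw: str) -> Tuple[Optional[SpanContext], dict]:
    """Read an envelope back into (trace context, business attributes)."""
    envelope = json.loads(raw)
    ctx = parse_traceparent(envelope.get("traceparent", ""))
    return ctx, envelope.get("attributes", {})
```

A consumer would call `consume`, record the returned attributes on its own span, and pass the context as `parent` when it publishes the next event, so a parcel's whole journey ends up in a single trace.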

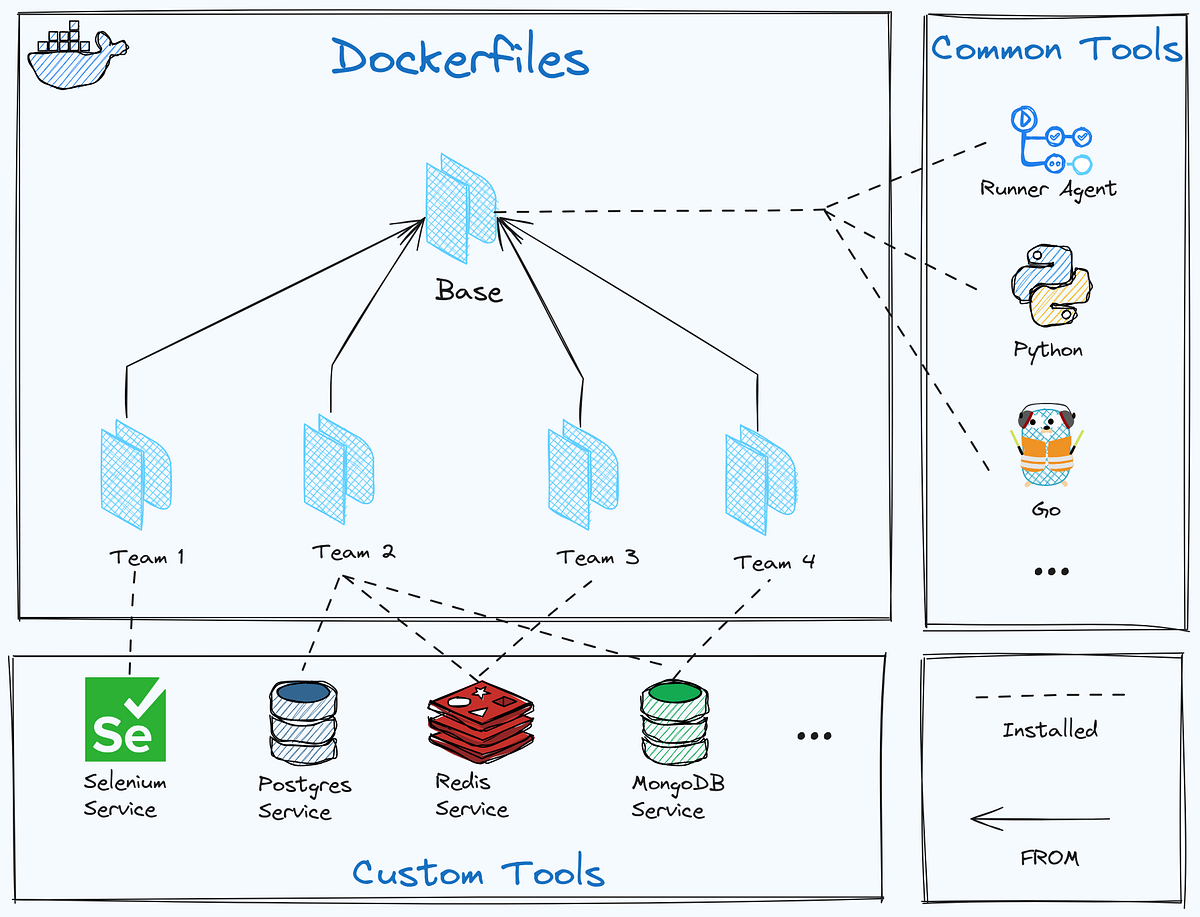

Optimizing costs with GitHub Actions and AWS Fargate Spot

The La Haus Platform team recently published this article on how they made their CI workflows more efficient. By focusing on the duration of their automated unit tests, they achieved a 60% improvement in efficiency and cut costs by more than 90%. The article highlights how AWS Fargate enables more cost-effective container execution, describes how the team automates its workflows with GitHub Actions, and explains why moving to parallel test execution on Fargate Spot tasks was key to saving both time and money.
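The article's exact splitting strategy is not summarized here, so the following is only a hypothetical illustration of what parallel test execution involves: distributing test files across N parallel runners (for example, N Fargate Spot tasks started from one CI workflow) so that each finishes in about the same time. This sketch assumes you have historical per-file durations and balances shards with the greedy longest-processing-time heuristic; the function name and input format are made up for the example.

```python
from __future__ import annotations

import heapq


def split_into_shards(durations: dict[str, float], num_shards: int) -> list[list[str]]:
    """Assign test files to shards, balancing total estimated duration.

    Greedy longest-processing-time (LPT) heuristic: take files from slowest
    to fastest and give each one to the shard with the smallest total so far.
    """
    if num_shards < 1:
        raise ValueError("num_shards must be >= 1")
    # Min-heap of (total_seconds, shard_index): the lightest shard pops first.
    heap = [(0.0, i) for i in range(num_shards)]
    shards: list[list[str]] = [[] for _ in range(num_shards)]
    # Sort by descending duration, then by name for deterministic output.
    for name, seconds in sorted(durations.items(), key=lambda kv: (-kv[1], kv[0])):
        total, idx = heapq.heappop(heap)
        shards[idx].append(name)
        heapq.heappush(heap, (total + seconds, idx))
    return shards
```

Each parallel job could compute the full split and then run only `split_into_shards(durations, n)[its_own_index]`. Because the split is deterministic, every job agrees on the assignment without coordinating.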

AI-powered video summarizer with Amazon Bedrock and Anthropic’s Claude

This article describes an interesting experiment in which the author builds a service that summarizes YouTube videos. It uses AI to turn video transcripts into short summaries and then reads them aloud with Amazon Polly. The summaries are generated by Anthropic's Claude 2.1 model on Amazon Bedrock, and the whole solution is serverless. All the code is available, so anyone interested can try it out or adapt it.
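The author's repository is the reference implementation; the following is an independent, minimal sketch of the two AWS calls such a pipeline needs. It builds a request for Claude 2.1 in Bedrock's Text Completions format (a `prompt` with `Human:`/`Assistant:` turns and `max_tokens_to_sample`), then sends the resulting summary to Polly. The prompt wording, temperature, region and voice are assumptions, and fetching the YouTube transcript is not shown.

```python
import json

MODEL_ID = "anthropic.claude-v2:1"  # Bedrock model ID for Claude 2.1


def build_summary_request(transcript: str, max_tokens: int = 500) -> str:
    """Build a Bedrock request body for Claude 2.1 (Text Completions format)."""
    prompt = (
        "\n\nHuman: Summarize the following video transcript in a few short "
        "paragraphs suitable for reading aloud.\n\n"
        f"<transcript>\n{transcript}\n</transcript>"
        "\n\nAssistant:"
    )
    return json.dumps({
        "prompt": prompt,
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.2,  # assumed value: low randomness for summaries
    })


def parse_summary_response(body: bytes) -> str:
    """Extract the generated text from a Claude 2.x Bedrock response body."""
    return json.loads(body)["completion"].strip()


def summarize_and_speak(transcript: str, region: str = "us-east-1") -> bytes:
    """Summarize with Bedrock, then synthesize MP3 audio with Polly.

    Requires boto3 and AWS credentials. Polly caps the input length per
    request, so very long summaries would need to be split first.
    """
    import boto3  # imported lazily so the helpers above work without it

    bedrock = boto3.client("bedrock-runtime", region_name=region)
    response = bedrock.invoke_model(
        modelId=MODEL_ID,
        body=build_summary_request(transcript),
        contentType="application/json",
        accept="application/json",
    )
    summary = parse_summary_response(response["body"].read())

    polly = boto3.client("polly", region_name=region)
    speech = polly.synthesize_speech(Text=summary, OutputFormat="mp3", VoiceId="Joanna")
    return speech["AudioStream"].read()
```

Keeping request building and response parsing as pure functions makes them easy to unit test without calling AWS; in a serverless deployment, `summarize_and_speak` would typically run inside a Lambda handler.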

Thank you for reading my newsletter!

If you liked it, please invite your friends to subscribe!

If you were forwarded this newsletter and liked it, you can subscribe for free here:

Have you read an article you liked and want to share it? Send it to me and you might see it published in this newsletter!

Interested in past issues? You can find them here!